Introducing NSED: The Agentic Engine That Breaks the RAM Shortage

Technical pre-print: https://arxiv.org/abs/2601.16863

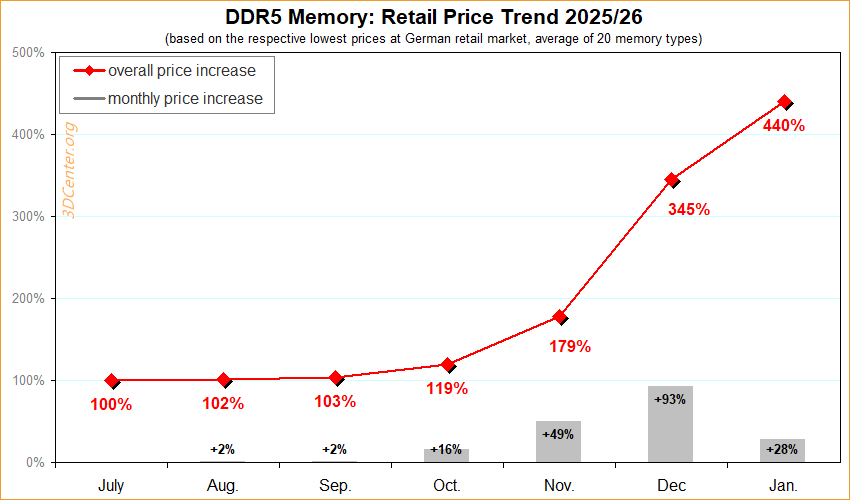

In January 2026, the price of running SOTA AI didn't just go up; it broke. With RAM costs up 440% and supply effectively nationalized by hyperscalers, the era of the 'Monolithic Model' has exhausted itself.

For the rest of us—researchers, startups, and security firms—running a monolithic 70B+ parameter model in-house has transitioned from "expensive" to "prohibitive".

This centralizes intelligence. It makes high-fidelity reasoning the exclusive domain of those with $300k server racks.

At Peeramid Labs, we rejected this premise. We asked a different question:

Can we trade bandwidth for time?

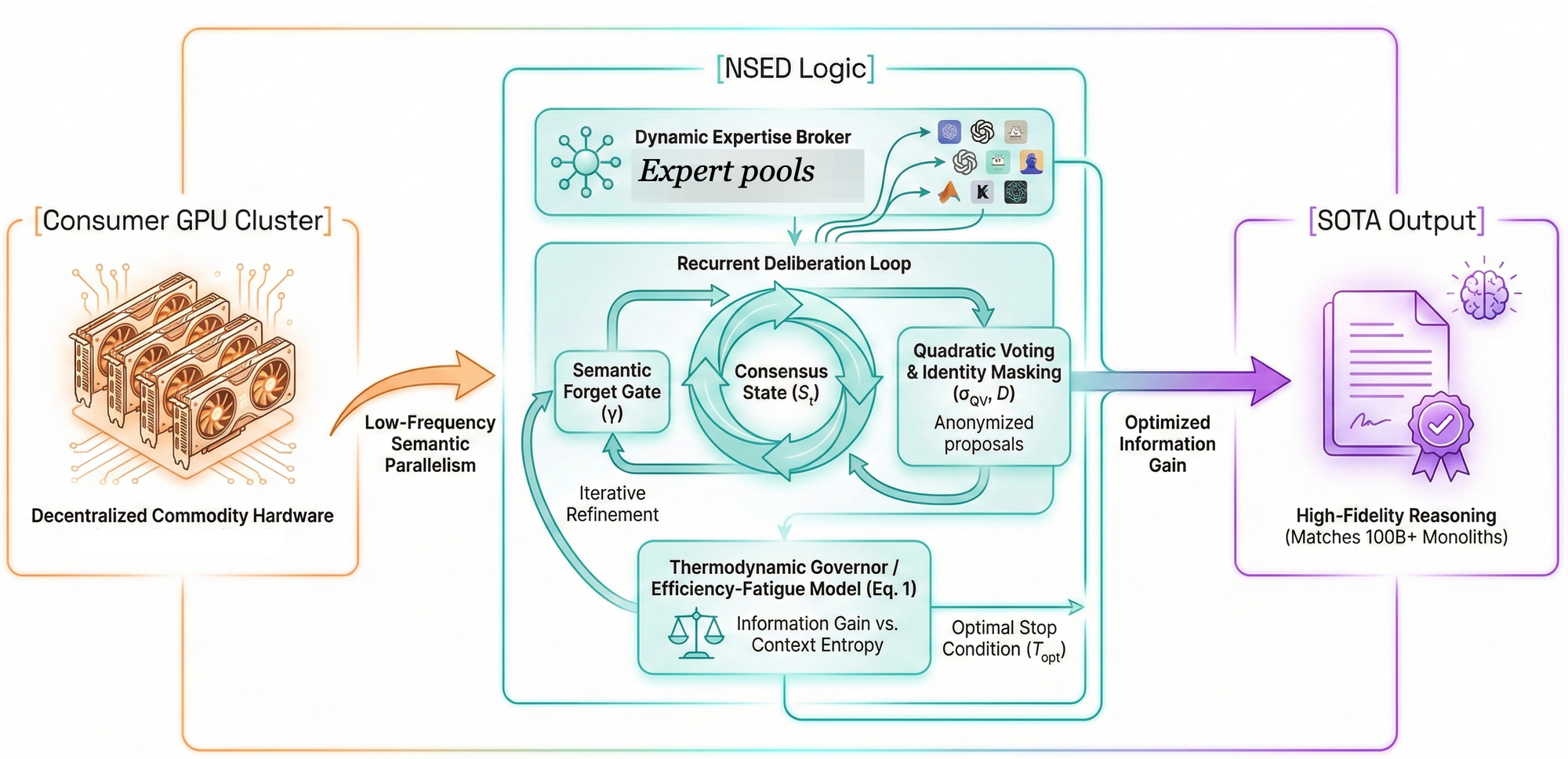

Today, we are introducing the answer: a Mixture-of-Models (MoM) architecture, pioneered by the NSED protocol.

NSED (N-Way Self-Evaluating Deliberation)

A protocol that allows disjoint clusters of consumer-grade hardware to match the reasoning performance of monolithic giants.

The Thermodynamics of Truth

Most LLMs today operate in "System 1" mode: instinctive, fast, and often prone to hallucination. The "thinking" models introduced last year add "System 2" reasoning: they verify their own outputs in an attempt to self-correct. This is a step in the right direction, but each model remains locked within the same knowledge space and an opaque reasoning process.

What we found is that intelligence isn't just a function of parameter count or inference time; it's a function of topology.

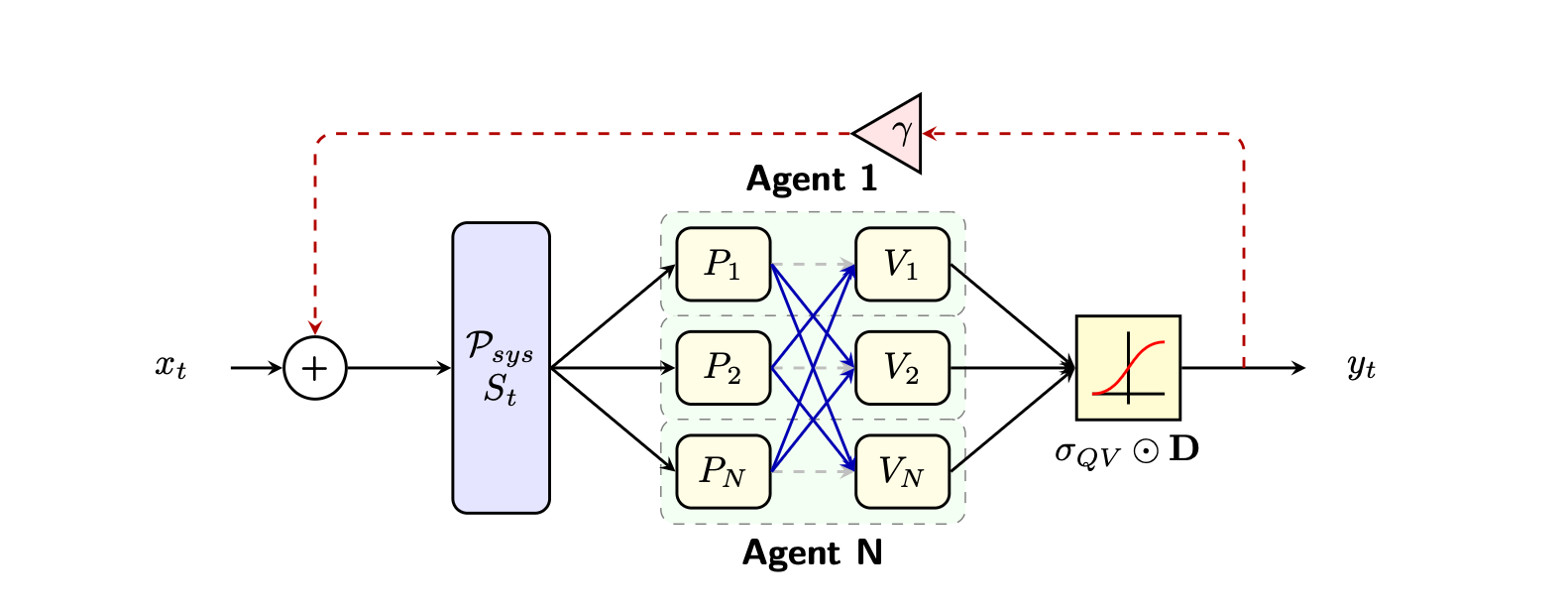

We formalized a new architecture: the Macro-Scale Semantic Recurrent Neural Network (SRNN).

Instead of one massive model generating an answer, NSED utilizes a swarm of smaller, specialized agents (running from consumer cards like the RTX 4090 up to enterprise servers on H100s or B200s) that debate, verify, and refine an answer over multiple rounds.
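The round structure can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not NSED's implementation: `Agent`, `deliberate`, and the summed-score selection rule are hypothetical, each agent is reduced to two plain functions standing in for model calls, and the text answer passed from round to round plays the part of the recurrent state.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Agent:
    """Hypothetical agent: two plain callables standing in for model calls."""
    name: str
    propose: Callable[[str, str], str]   # (task, current answer) -> refined proposal
    score: Callable[[str, str], float]   # (task, candidate) -> quality score

def deliberate(task: str, agents: List[Agent], rounds: int) -> str:
    """Run `rounds` propose/evaluate/refine cycles; the winning text is the next state."""
    state = ""  # the shared answer carried between rounds (the "recurrent" state)
    for _ in range(rounds):
        # Propose: every agent refines the current state independently.
        proposals: Dict[str, str] = {a.name: a.propose(task, state) for a in agents}
        # Evaluate: every agent scores every proposal (no masking in this minimal version).
        totals = {name: sum(judge.score(task, text) for judge in agents)
                  for name, text in proposals.items()}
        # Refine: the best-scored proposal becomes the input to the next round.
        state = proposals[max(totals, key=totals.get)]
    return state

swarm = [
    Agent("drafter", lambda task, s: s + " draft", lambda task, c: float(len(c))),
    Agent("critic", lambda task, s: s + " checked", lambda task, c: float("checked" in c)),
]
print(deliberate("summarize the incident report", swarm, rounds=2))
```

Carrying only text between rounds is what keeps the state small enough to cross ordinary network links; a real deployment would replace the lambdas with calls to separately hosted models.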

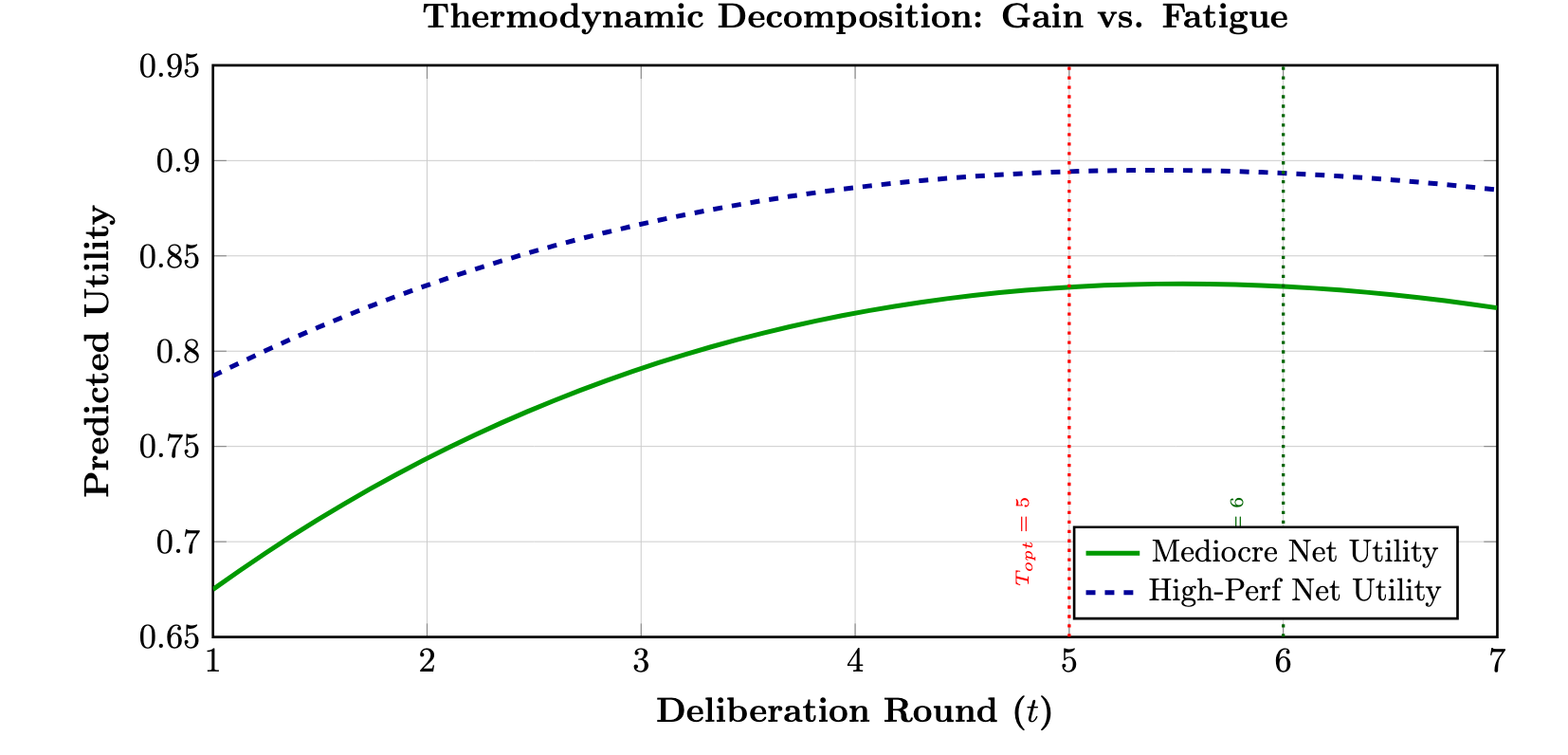

Crucially, we describe a parametric law governing this process: the Efficiency-Fatigue Model.

\begin{equation}U(t) = 1 - (1-p_g)e^{-\Lambda(p_v-p_g)t} - \beta t^2\end{equation}

C-suite Translation: We mathematically identified the 'Point of Diminishing Returns' for AI ensembles. NSED stops the model before it starts charging you for hallucinations.
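The Efficiency-Fatigue Model above can be evaluated directly to locate that point. The post does not define its symbols, so this sketch assumes p_g is the accuracy of a single generation, p_v the accuracy of verification, Λ (`lam`) a deliberation-rate constant and β (`beta`) the fatigue coefficient; the sample values are invented for illustration. For β > 0, U(t) is concave, so its slope falls monotonically and the peak t* (where dU/dt = 0) can be found by bisection.

```python
import math

# Assumed meanings (not defined in this post): p_g = single-shot accuracy,
# p_v = verification accuracy, lam = rate constant (Λ), beta = fatigue coefficient (β).

def utility(t: float, p_g: float, p_v: float, lam: float, beta: float) -> float:
    """Efficiency-Fatigue Model: U(t) = 1 - (1 - p_g) e^{-Λ (p_v - p_g) t} - β t²."""
    k = lam * (p_v - p_g)
    return 1.0 - (1.0 - p_g) * math.exp(-k * t) - beta * t * t

def utility_slope(t: float, p_g: float, p_v: float, lam: float, beta: float) -> float:
    """dU/dt = (1 - p_g) k e^{-k t} - 2 β t, with k = Λ (p_v - p_g)."""
    k = lam * (p_v - p_g)
    return (1.0 - p_g) * k * math.exp(-k * t) - 2.0 * beta * t

def peak_round(p_g: float, p_v: float, lam: float, beta: float, tol: float = 1e-9) -> float:
    """Return t* >= 0 maximising U(t): the point of diminishing returns."""
    if beta <= 0:
        raise ValueError("beta must be positive for U(t) to have a finite peak")
    if utility_slope(0.0, p_g, p_v, lam, beta) <= 0:
        return 0.0  # deliberation never helps under these parameters: stop at once
    # U is concave for beta > 0, so the slope decreases monotonically: bracket, then bisect.
    lo, hi = 0.0, 1.0
    while utility_slope(hi, p_g, p_v, lam, beta) > 0:
        lo, hi = hi, hi * 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if utility_slope(mid, p_g, p_v, lam, beta) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

t_star = peak_round(p_g=0.6, p_v=0.8, lam=5.0, beta=0.002)
print(f"peak at t* = {t_star:.2f}, U(t*) = {utility(t_star, 0.6, 0.8, 5.0, 0.002):.3f}")
```

With these made-up parameters the peak lands at roughly t* ≈ 3.4 rounds; every round beyond that lowers U(t). If p_v ≤ p_g, verification adds nothing and the sketch returns t* = 0.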

This equation predicts when an AI ensemble starts to "overthink": the exponential gain term saturates as t grows, while the fatigue penalty βt² keeps rising. It quantifies the "Laziness Tax" inherent in standard models. By applying Diagonal Masking, where agents are blinded to their own identity, we force a "cooling" effect that delays this fatigue, allowing our swarm to extract higher-fidelity signals from smaller models than was previously thought possible.
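One way to read Diagonal Masking, offered as an assumption rather than the paper's definition: the orchestrator collects an N×N score matrix in which row i holds judge i's scores for every proposal, shows proposals to judges in a shuffled, unlabeled order, and drops the diagonal (self-assessments) before aggregating. The function names and scores below are hypothetical.

```python
import random
from typing import List, Sequence

def anonymized_order(n: int, seed: int) -> List[int]:
    """Shuffled presentation order, so a judge cannot tell which proposal is its own."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    return order

def masked_peer_scores(scores: Sequence[Sequence[float]]) -> List[float]:
    """Mean score per proposal with the diagonal (self-assessment) masked out.

    scores[i][j] is the score judge i gave to proposal j, which agent j authored.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("peer review needs at least two agents")
    return [sum(scores[i][j] for i in range(n) if i != j) / (n - 1) for j in range(n)]

# Agent 0 rates its own proposal 1.0; its peers are far less impressed.
scores = [[1.0, 0.3, 0.2],
          [0.4, 0.5, 0.7],
          [0.4, 0.7, 0.5]]
unmasked = [sum(row[j] for row in scores) / len(scores) for j in range(3)]
masked = masked_peer_scores(scores)
print("unmasked winner:", max(range(3), key=lambda j: unmasked[j]))
print("masked winner:  ", max(range(3), key=lambda j: masked[j]))
```

With self-scores included, agent 0's self-rating hands it the win (winner 0); with the diagonal masked, the peer-preferred proposal 1 wins.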

We aren't just generating text; we are engineering certainty.

The Hardware Arbitrage: 10x Lower CAPEX

The most radical implication of NSED is economic. It breaks the "Interconnect Wall".

Stop sending your IP to the cloud because you can't afford the hardware. With NSED, the hardware is already in your office.

Standard enterprise clusters rely on Tensor Parallelism, which requires expensive NVLink interconnects to exchange large activation tensors between GPUs within microseconds. NSED uses Semantic Parallelism: our agents communicate via natural-language text, which is lightweight enough to travel over standard PCIe or Ethernet.
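A back-of-the-envelope comparison shows why the interconnect matters. The sketch assumes a Llama-2-70B-class model (80 layers, hidden size 8192), fp16 activations, and Megatron-style tensor parallelism with two all-reduces per layer; these are illustrative assumptions, not NSED measurements, and the critique text is made up.

```python
def tp_bytes_per_token(num_layers: int = 80, hidden_size: int = 8192,
                       bytes_per_value: int = 2, allreduces_per_layer: int = 2) -> int:
    """Activation payload all-reduced across GPUs for one decoded token.

    Defaults assume a Llama-2-70B-class model (80 layers, hidden size 8192), fp16
    activations, and Megatron-style tensor parallelism (two all-reduces per layer:
    after attention and after the MLP). Ring all-reduce overhead is ignored.
    """
    return num_layers * allreduces_per_layer * hidden_size * bytes_per_value

def text_message_bytes(message: str) -> int:
    """Wire size of a natural-language message passed between agents."""
    return len(message.encode("utf-8"))

tokens = 1_000
print(f"tensor parallel: ~{tokens * tp_bytes_per_token() / 1e9:.1f} GB of activations")
critique = "Proposal B ignores the rate limit on /orders; retry logic will cascade. " * 40
print(f"semantic parallel: ~{text_message_bytes(critique) / 1e3:.1f} KB per critique")
```

Under those assumptions, decoding 1,000 tokens moves roughly 2.6 GB of activations between GPUs, while a multi-paragraph critique is a few kilobytes of UTF-8 text.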

This allows us to replace the Monolith with the Swarm.

We are essentially executing a "Hardware Arbitrage." We use the hardware you can actually buy (consumer GPUs) to deliver the intelligence you can't afford (enterprise reasoning).

This protocol is not exclusive to new hardware. Because Semantic Parallelism tolerates slow interconnects, NSED can also reduce e-waste by putting older-generation hardware back to work. Yes, the Tesla P40s you're thinking of might get a second chance.

From Chatbot to Mission Control

NSED is not a chatbot; it is a deliberation engine. This shift necessitates a new relationship with latency.

The Trade-off: Time for Truth

Because NSED agents debate, critique, and refine answers over multiple rounds, generation can take 2x to 12x longer than a standard API call. We do not optimize for speed; we optimize for rigor.

The "Glass Box" Interface

To make this wait valuable, we are completely rethinking the UX.

- The Mission Controller: You don't just stare at a blinking cursor. You watch the "Influence Matrix" in real-time, seeing exactly which agent proposed a solution and which agent (the Critic) shot it down.

- Steerability: Because the reasoning happens in discrete rounds, you have a Human-in-the-Loop steering wheel. You don't need to interrupt the process to intervene; instead, you steer the swarm between thought cycles to inject a correction.
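The steering checkpoint can be sketched as a loop that never interrupts a round but consults a human hook between rounds. `run_with_checkpoints`, its callback signatures, and the rejection-count table (a much-simplified stand-in for the Influence Matrix) are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple

# Hypothetical signatures: a round takes (task, human guidance or None) and returns
# (current answer, list of (critic, author) pairs for proposals the critic rejected).
RoundFn = Callable[[str, Optional[str]], Tuple[str, List[Tuple[str, str]]]]
SteerFn = Callable[[int, str], Optional[str]]

def run_with_checkpoints(task: str, run_round: RoundFn, steer: SteerFn,
                         rounds: int) -> Tuple[str, Counter]:
    """Run rounds to completion; between rounds, ask the human for optional guidance."""
    influence: Counter = Counter()  # (critic, author) -> how often critic rejected author
    guidance: Optional[str] = None
    answer = ""
    for r in range(rounds):
        answer, rejections = run_round(task, guidance)  # a round is never interrupted
        influence.update(rejections)
        guidance = steer(r, answer)  # None lets the swarm continue unchanged
    return answer, influence

seen: List[Optional[str]] = []

def fake_round(task: str, guidance: Optional[str]) -> Tuple[str, List[Tuple[str, str]]]:
    seen.append(guidance)
    return f"{task} [{guidance or 'no guidance'}]", [("critic", "proposer")]

answer, influence = run_with_checkpoints(
    "price the shipment", fake_round,
    steer=lambda r, ans: "use EUR, not USD" if r == 0 else None, rounds=3)
print(answer, dict(influence))
```

Guidance returned after round r only shapes round r + 1, which is what lets a correction land without aborting work in progress.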

The Fallacy of "Infinite Context"

The industry tells you that "10 Million Tokens" is the future. They don't tell you the cost.

Monolithic models suffer from Quadratic Complexity: doubling the context length quadruples the cost of self-attention and tends to degrade reasoning accuracy (the "Lost in the Middle" phenomenon). You are paying more to make the model dumber.
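The quadratic term is easy to check with the standard FLOP estimate for self-attention: the QKᵀ and AV products each cost about n²·d multiply-adds for context length n and model width d. This sketch counts only those two products (projections and MLP layers grow linearly in n) and uses an arbitrary width of 8192.

```python
def attention_flops(context_tokens: int, d_model: int) -> int:
    """Rough FLOPs of one layer's attention-score (QK^T) and weighted-sum (AV) products.

    Each is about n^2 * d multiply-adds (2 FLOPs each); the projections and MLP,
    which grow only linearly in n, are left out to isolate the quadratic term.
    """
    return 2 * 2 * context_tokens * context_tokens * d_model

base = attention_flops(128_000, 8192)
doubled = attention_flops(256_000, 8192)
print(doubled / base)  # 4.0
```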

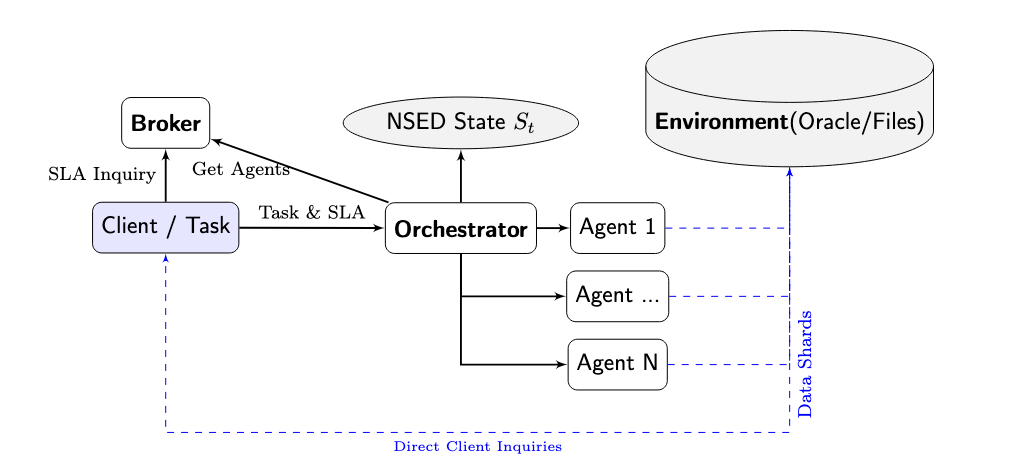

Our Agentic Oracle Topology, an NSED extension, lets each agent maintain two distinct interfaces: a Protocol Interface to the Orchestrator for peer review, and a Side-Channel to the Environment for independent context retrieval.

This decouples retrieval (individual) from reasoning (collective).
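The dual-interface design maps naturally onto structural types. In this hypothetical sketch, `ProtocolInterface` is all the Orchestrator sees, while each agent keeps its `SideChannel` private; the class names, the substring-based retrieval, and the placeholder review rule are assumptions for illustration, not NSED's API.

```python
from typing import List, Protocol

class ProtocolInterface(Protocol):
    """Faces the Orchestrator: only proposals and peer reviews cross this boundary."""
    def propose(self, task: str) -> str: ...
    def review(self, task: str, candidate: str) -> float: ...

class SideChannel(Protocol):
    """Faces the Environment: private, per-agent retrieval (files, databases, tools)."""
    def retrieve(self, query: str) -> List[str]: ...

class InMemoryDocs:
    """Toy environment: returns documents that mention the query."""
    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str) -> List[str]:
        return [d for d in self.docs if query.lower() in d.lower()]

class OracleAgent:
    """Satisfies ProtocolInterface structurally; its SideChannel is never exposed."""

    def __init__(self, name: str, env: SideChannel) -> None:
        self.name = name
        self._env = env

    def propose(self, task: str) -> str:
        context = self._env.retrieve(task)  # retrieval is individual
        return f"{self.name}: answer to {task!r} grounded in {len(context)} local snippets"

    def review(self, task: str, candidate: str) -> float:
        return 1.0 if task in candidate else 0.0  # placeholder peer-review rule

def collect_proposals(task: str, agents: List[ProtocolInterface]) -> List[str]:
    """Orchestrator side: sees only text, never the agents' environments."""
    return [agent.propose(task) for agent in agents]

schema_agent = OracleAgent("A", InMemoryDocs(["orders table schema", "users table schema"]))
routes_agent = OracleAgent("B", InMemoryDocs(["GET /orders", "POST /login"]))
print(collect_proposals("orders", [schema_agent, routes_agent]))
```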

Instead of forcing one model to remember 10,000 files, NSED acts as a Semantic File System.

- Agent A loads the Database Schema.

- Agent B loads the API Routes.

- Agent C loads the Security Protocols.

They debate the code in real time without ever needing to fit the entire repository into a single VRAM envelope. The result is Linear Scaling. As we ramp up scale and infrastructure, you will be able to audit a 100GB repository with the same per-node hardware you use for a 10MB script.
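A Semantic File System implies some rule for deciding which agent holds which files. This is a minimal sketch under assumed conditions: a hypothetical prefix-based routing table, per-file token counts, and a fixed per-agent context budget. Because each agent's shard is capped, a larger repository calls for more agents rather than a bigger context window, which is the intuition behind the linear-scaling claim.

```python
from typing import Dict, List

# Hypothetical routing table: repository prefix -> owning agent.
ROUTES: Dict[str, str] = {
    "db/": "agent_a_schema",
    "api/": "agent_b_routes",
    "security/": "agent_c_security",
}

def shard_repository(files: Dict[str, int], routes: Dict[str, str],
                     budget_tokens: int) -> Dict[str, List[str]]:
    """Assign each file (path -> token count) to the agent that owns its prefix.

    Raises ValueError if any agent's shard would overflow its context budget,
    signalling that the repository needs more agents, not a bigger context.
    """
    shards: Dict[str, List[str]] = {agent: [] for agent in routes.values()}
    load: Dict[str, int] = {agent: 0 for agent in routes.values()}
    for path, tokens in sorted(files.items()):
        owner = next((a for prefix, a in routes.items() if path.startswith(prefix)), None)
        if owner is None:
            continue  # unowned files are skipped in this sketch
        shards[owner].append(path)
        load[owner] += tokens
        if load[owner] > budget_tokens:
            raise ValueError(f"{owner} exceeds its {budget_tokens}-token budget")
    return shards

repo = {"db/schema.sql": 1200, "api/routes.py": 800, "security/policy.md": 300, "README.md": 50}
print(shard_repository(repo, ROUTES, budget_tokens=2000))
```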

What is the NSED Engine good for?

NSED is a general reasoning orchestration layer. Here is a summary of its high-level features:

- Higher Accuracy: ~20% gain in benchmarks on complex multi-step reasoning tasks vs bare agent models.

- Measurably Less Biased: ~40% less sycophantic in our benchmarks using the DarkBench suite.

- More Transparent: No opaque "Aggregator" model. Your audit trail captures the full plurality of options the agents put forward.

- Steerable: Native Human-in-the-Loop control plane without interrupting the process.

- Decentralised: No hidden central layer forms the final result; you can verify every step.

Primary Use Cases

1. Supply Chain Management: Logistics is a "High Entropy" domain—lots of noise, incomplete data. Standard LLMs hallucinate when data is missing. NSED’s iterative loop allows it to ask clarifying questions or flag anomalies with higher confidence. It allows multi-party collaboration on proprietary data, forming documents through deliberation without revealing context data to any central node.

2. Code Reasoning: Imagine plugging a specialized "Solidity Auditor" or "Legal Discovery" module into the NSED loop. You get domain-specific, SOTA reasoning without the data risk of the cloud. This is the end of the "Audit-Once" era. With NSED, you run a Continuous Adversarial Defense swarm that hunts for bugs 24/7.

3. Scientific Research & Drug Discovery: Modern science is bottlenecked by human bandwidth. NSED acts as a Force Multiplier for Discovery, moving beyond simple text generation into complex simulation. Whether functioning as an Autonomous Peer Review Ring that dissects pre-prints for p-hacking, or running "Virtual Phase 0" drug trials where Chemist and Toxicologist agents debate a molecule's viability in-silico, the swarm trades computational speed for the mathematical rigor required to filter out expensive failures before they ever reach the wet lab.

4. Modular AI Safety: Current safety measures are trained into the model, making them hard to update. NSED decouples safety. You can hot-swap a "Safety Adapter" agent into the loop to enforce specific compliance rules (e.g., GDPR, HIPAA, or Corporate Brand Voice) without retraining the base models. If the generating agent creates toxic content, the safety agent catches it during the deliberation phase—before it ever reaches the user.

5. Quantitative Finance & Alpha Decay Analysis: In trading, "hallucination" means losing money. NSED’s Thermodynamic Confidence Score provides a critical meta-metric for quant funds. Instead of just generating a trading signal ("Buy Apple"), the swarm generates a signal and a stability score based on how hard the agents had to debate to reach that conclusion. High entropy during the debate? Low confidence signal. This allows funds to filter out "lucky guesses" and trade only on high-certainty structural logic.

6. The Sovereign Home & SME: The "Memory Wall" hurts your living room as much as the datacenter. NSED unifies "idle compute" (gaming PCs, Mac Studios, NAS) into a Household Intelligence Server. A "Private Banker" or "Health Guardian" can live in your house, analyzing medical records and financial history locally and representing your private context in deliberations with remote agents, without ever sending a single byte of private data to the cloud.

7. Industrial Mesh, Edge AI & IoT: For industrial SMEs and agriculture, the cloud is too far away. NSED running on Edge Clusters (groups of distributed devices with reasoning capacity) lets them join forces and solve challenges beyond the capacity of any individual edge device.
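The hot-swappable Safety Adapter from use case 4 can be sketched as a registry of check functions consulted during deliberation. `SafetyGate`, `no_email_addresses`, and the regex are hypothetical; the pattern is a toy stand-in for a real compliance rule.

```python
import re
from typing import Callable, Dict, List, Optional

# A safety adapter inspects a candidate answer and returns a violation message or None.
SafetyAdapter = Callable[[str], Optional[str]]

class SafetyGate:
    """Registry of hot-swappable safety adapters consulted before anything is released."""

    def __init__(self) -> None:
        self._adapters: Dict[str, SafetyAdapter] = {}

    def install(self, name: str, adapter: SafetyAdapter) -> None:
        self._adapters[name] = adapter  # applies from the next review; no retraining

    def remove(self, name: str) -> None:
        self._adapters.pop(name, None)

    def review(self, candidate: str) -> List[str]:
        """Collect every violation; an empty list means the candidate may be released."""
        violations = []
        for name, check in self._adapters.items():
            message = check(candidate)
            if message is not None:
                violations.append(f"{name}: {message}")
        return violations

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def no_email_addresses(text: str) -> Optional[str]:
    """Toy stand-in for a GDPR-style rule: block raw email addresses."""
    return "contains an email address" if EMAIL.search(text) else None

gate = SafetyGate()
gate.install("gdpr", no_email_addresses)
print(gate.review("Contact jane.doe@example.com for the report."))
```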
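The post does not specify how the Thermodynamic Confidence Score of use case 5 is computed. As an illustrative stand-in only, this sketch measures the Shannon entropy of the agents' positions in each round, normalizes it by the maximum possible for that many voters, and averages 1 − H/H_max across rounds; `stability_score` and the vote labels are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence

def vote_entropy(votes: Sequence[str]) -> float:
    """Shannon entropy (bits) of the agents' positions in one round."""
    counts = Counter(votes)
    n = len(votes)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def stability_score(rounds: List[Sequence[str]]) -> float:
    """1.0 = unanimous every round; 0.0 = maximally split every round."""
    if not rounds:
        raise ValueError("need at least one round of votes")
    scores = []
    for votes in rounds:
        max_h = math.log2(len(votes)) if len(votes) > 1 else 1.0  # all-distinct entropy
        scores.append(1.0 - vote_entropy(votes) / max_h)
    return sum(scores) / len(scores)

contested = [["BUY", "SELL", "HOLD"], ["BUY", "BUY", "HOLD"], ["BUY", "BUY", "BUY"]]
print(f"stability: {stability_score(contested):.2f}")
```

A swarm that starts split and converges scores in between; one that stays unanimous throughout scores 1.0.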

Decentralised Reasoning API

Today, we are opening the waitlist for the API layer of the world’s first Decentralized Reasoning Exchange, which implements the NSED protocol.

We don't just provide tokens; we provide consensus-verified intelligence.

Our vision is to build an Expert Marketplace. We are committed not just to building an orchestration layer, but to bridging the gap to SOTA performance through a community-owned library of modular Expert Adapters that become part of something bigger. We have many updates coming up, so stay tuned!

The NSED API is offered as a subscription with bring-your-own-key and zero-data-retention policies. For data-sensitive, high-stakes industries, we offer the option to license the binary and self-host a reasoning node.

Read the full NSED technical paper: https://arxiv.org/abs/2601.16863